How to Build a Modern Data Architecture

By Tony Velcich

May 23, 2022

Migrate, manage, and accelerate your move to the cloud.

At a recent webinar on functional strategies for a modern data architecture, we asked participants two key questions: How would you describe the current state of your cloud migration journey? What challenges have you faced during your migrations?

Their responses were not surprising. Of the 166 people who attended, 44 percent said that they are in the process of migrating their on-premises data to the cloud, and 75 percent said that the biggest challenge they face is the complexity of their current environment.

Cloud migrations are not easy. And most go over budget because:

- On-premises environments are complex and hard to map, even to the best cloud topology.

- Organizations running on-premises environments often lack the in-house expertise that a migration requires.

- Migrations are mostly done manually, with little automation.

So why are more and more organizations migrating their data to the cloud at all? The main reason is that workloads are moving to the cloud en masse, driven by the need for both higher business agility and access to modern software for AI and machine learning. In addition, companies are looking to lower optimization costs, and they are also seeking other means of support because support for their older on-premises software is coming to an end or has already ended.

This article outlines three key points that will help data leaders move their data to the cloud and modernize it there: a framework for accelerating your move to Amazon Web Services (AWS); an approach to moving data to the cloud, fast; and keys to executing an effective assessment.

AWS: Build a modern data architecture

A modern data architecture addresses the idea that taking a one-size-fits-all approach to analytics eventually leads to compromises. “It is not simply about integrating a data lake with a data warehouse, but rather about integrating a data lake, a data warehouse, and purpose-built stores, enabling unified governance and easy data movement,” says Depankar Ghosal, principal architect, Global Special Practice at AWS.

Ghosal further explains that with a modern data architecture on AWS, customers can rapidly build scalable data lakes; use a broad and deep collection of purpose-built data services; ensure compliance via unified data access, security, and governance; scale their systems at a low cost without compromising performance; and easily share data across organizational boundaries, enabling customers to make decisions at scale with speed and agility.

WANdisco: A data-first approach delivers AI insights faster

As we’ve often mentioned, traditional approaches to data migration don’t work. Such approaches are based on an application-first mindset. While this made sense for OLTP applications, it is not conducive to data lake migrations where use cases are for ad-hoc analytics or new AI and machine learning development. An application-first approach also leads to big-bang migrations, which take longer to complete and result in projects running 60 percent to 80 percent over time and/or budget.

Data is the most important aspect of your data lake migration. Until the data is available, you can’t take full advantage of the services and capabilities of your cloud environment. “The most interesting aspect of a cloud migration is the fact that people are unfamiliar with what it takes to move their data at scale to the cloud,” says Paul Scott-Murphy, CTO, WANdisco. “It’s a novel thing for them to be doing, so traditional approaches to moving data or planning a migration between environments may not be applicable to moving to the cloud.” Scott-Murphy adds that putting data, not applications, at the center of the cloud migration process requires technologies that support this approach, enabling you to begin performing AI, machine learning, and analytics in the cloud much sooner.

Critical components of cloud data migration include:

- Performance — moving data fast and at larger scale

- Predictability — determining when your migration will be completed so that you can properly plan your workload migrations to take advantage of the cloud environment

- Automation — making this process as straightforward and simple as possible will give you the flexibility required to migrate and optimize workloads effectively in a cloud environment

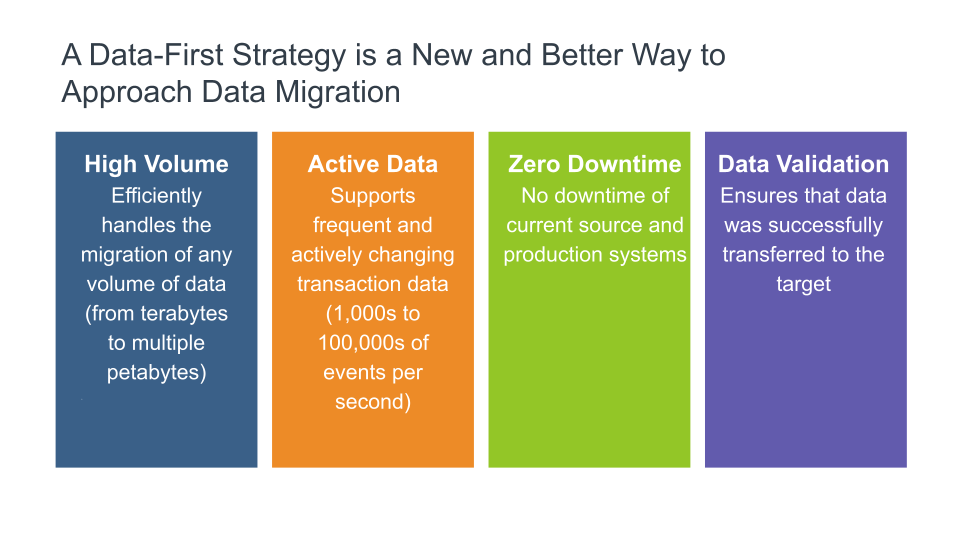
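To make the predictability point concrete, here is a rough back-of-the-envelope sketch of how a completion estimate might be calculated when the source data keeps changing during the transfer. The function name, parameters, and figures are illustrative assumptions, not values from the webinar or from any vendor tool.

```python
def estimate_migration_days(
    total_tb: float,
    throughput_tb_per_day: float,
    change_tb_per_day: float = 0.0,
) -> float:
    """Estimate the days needed to move a dataset that is still changing.

    Assumption: newly written or modified data must also be transferred,
    so the effective rate is throughput minus the daily change rate.
    """
    net_rate = throughput_tb_per_day - change_tb_per_day
    if net_rate <= 0:
        # The migration can never catch up with the source.
        raise ValueError("throughput must exceed the daily change rate")
    return total_tb / net_rate


# Hypothetical figures: 1 PB to move, 50 TB/day of bandwidth,
# 10 TB/day of ongoing change on the source cluster.
days = estimate_migration_days(1000, 50, 10)
print(f"Estimated completion in about {days:.0f} days")
```

A simple model like this ignores ramp-up, throttling, and retries, but it shows why the change rate on an active source matters as much as raw bandwidth when planning workload cutovers.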

These components are what a data-first approach is all about. A data-first strategy can help ensure that the data you move to the cloud is available to data scientists in days, not months, and can further ensure faster time-to-insights, provide flexibility for workload migration, and optimize existing workloads to leverage new cloud capabilities. A successful data-first approach must address the following:

- High volume: Efficiently handles migration of any volume of data, from terabytes to multiple petabytes

- Active data: Supports frequent and actively changing transaction data (thousands to hundreds of thousands of events per second)

- Zero downtime: No downtime of current source and production systems

- Data validation: Ensures that the data was successfully transferred to the target
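As an illustration of the data validation requirement, the following minimal sketch compares content checksums between a source and a target. It assumes both sides are reachable as local directory trees, which is a simplification: real migrations to object storage would typically rely on the checksums the storage service exposes. The function names are hypothetical, and this is not a description of how any particular migration product validates data.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def tree_digests(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its digest."""
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in root.rglob("*")
        if p.is_file()
    }


def compare_trees(source: Path, target: Path) -> dict[str, list[str]]:
    """Report files that are missing, mismatched, or unexpected on the target."""
    src, dst = tree_digests(source), tree_digests(target)
    return {
        "missing": sorted(src.keys() - dst.keys()),
        "mismatched": sorted(k for k in src.keys() & dst.keys() if src[k] != dst[k]),
        "unexpected": sorted(dst.keys() - src.keys()),
    }
```

An empty report from `compare_trees` would indicate that every source file exists on the target with identical content. For actively changing data, a check like this is only meaningful against a consistent snapshot of the source.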

Web-hosting company GoDaddy is a great example of the benefit of moving beyond just migration and into data modernization at scale. Using WANdisco LiveData Migrator, GoDaddy quickly moved petabytes of data in a heavily used Hadoop cluster to Amazon S3 with zero downtime to business operations. By automating the migration with an eye toward modernization, the GoDaddy data science team was able to modernize its data infrastructure in AWS and rapidly deliver both valuable insights and advanced analysis to business stakeholders, all while decreasing overall infrastructure costs.

Unravel: 4 steps to accelerating workload migrations

A modern data architecture and a data-first strategy are just two elements of cloud migration and modernization. How quickly the move is done is also key. “Migration doesn’t happen overnight; it all starts with careful planning and then happens in a phased fashion over time,” says Shivnath Babu, CTO and co-founder, Unravel Data.

The following four steps are recommendations from Unravel that can help data leaders accelerate the movement of data and workloads to the cloud:

- Assess: Understand the current on-premises estate and how it will map to the cloud, and then create a total cost of ownership model.

- Plan: Choose which workloads to move to the cloud, size the cloud clusters, and create migration sprints.

- Test, fix, verify: Test migrated workloads for correctness, performance, and cost.

- Optimize, manage, scale: Conduct ongoing governance, troubleshooting, and optimization efforts.
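The total cost of ownership model mentioned in the Assess step can be sketched very simply. The example below is an assumption-laden illustration, not Unravel's methodology: the `CostModel` class, the `break_even_month` helper, and all dollar figures are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CostModel:
    name: str
    upfront: float   # one-time cost, e.g. migration effort or hardware refresh
    monthly: float   # recurring cost, e.g. infrastructure, licenses, staff

    def total(self, months: int) -> float:
        """Cumulative cost after the given number of months."""
        return self.upfront + self.monthly * months


def break_even_month(
    on_prem: CostModel, cloud: CostModel, horizon: int = 60
) -> Optional[int]:
    """First month in which the cloud option is cumulatively cheaper, if any."""
    for month in range(1, horizon + 1):
        if cloud.total(month) < on_prem.total(month):
            return month
    return None


# Placeholder figures for illustration only.
on_prem = CostModel("on-premises", upfront=0, monthly=100_000)
cloud = CostModel("cloud", upfront=300_000, monthly=70_000)
print(break_even_month(on_prem, cloud))
```

A real assessment would break these totals down per workload and per cluster, which is where an accurate inventory of source apps and scripts becomes essential.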

The Unravel team worked with a domain registrar and web hoster to conduct a data-driven migration assessment around which they could build a plan. The client had initially gone through a weeks-long manual exercise that left it with incomplete information about the estimated versus actual number of source apps and scripts.

Unravel partnered with AWS to help the web hoster assess and plan the project, as well as estimate total cost of ownership. Furthermore, based on the overall assessment, Unravel was able to both increase the data migration to the cloud by 40 percent and reduce the time it took to move each application by more than 20 hours.

The way forward for data modernization

The cloud is all about automation, self-service, and democratization (providing data to everyone in an easy-to-consume fashion). However, putting all of this responsibility onto your data engineers and data scientists might not be the right approach because migration projects are complex and need careful planning across all stakeholders. So it’s important to get the right tools and experts on board to get your data strategy off to the right start.

Watch the webinar replay here.

Tony Velcich

Sr. Director, Product Marketing

Tony is an accomplished product management and marketing leader with over 25 years of experience in the software industry. Tony is currently responsible for product marketing at WANdisco, helping to drive go-to-market strategy, content and activities. Tony has a strong background in data management having worked at leading database companies including Oracle, Informix and TimesTen where he led strategy for areas such as big data analytics for the telecommunications industry, sales force automation, as well as sales and customer experience analytics.